Introduction

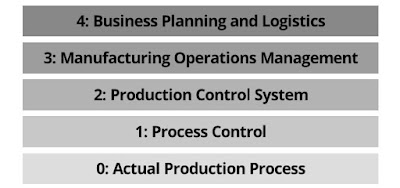

Manufacturing companies usually run a number of different software systems to monitor and control their operations, from business processes down to production systems. The functional hierarchy model is a fundamental concept that describes these different levels of control.

Functional hierarchy model for a manufacturing enterprise, depicting the five hierarchy levels in industrial automation.

This separation of systems creates technical barriers. To overcome them, international standards have been developed, such as the IEC 62264 series, which aims to harmonize levels 3 (MES) and 4 (ERP) and thus foster vertical integration.

Going further, modern smart manufacturing requires deeply integrated IT systems that provide flexibility and allow production to be rearranged. Approaches like Industry 4.0 aim at flexible production networks that additionally require horizontal integration across companies. Production-related information is therefore also exchanged within the network and must be forwarded vertically to the corresponding service endpoints of the local production system.

To fulfill the above requirements, two kinds of system integration are required:

- Horizontal integration for the linking and seamless communication of systems in the network on the same hierarchy level.

- Vertical integration for the integration within one production system, from the business floor to the shop floor. Vertical integration can go far beyond the manufacturing or management layers, down to programmable logic controllers and even single sensors.

Practically all companies share the same vision for the future: automation and individualization of the complete manufacturing process, from product description through order production to logistics and delivery. To realize this vision, different business partners are needed to execute specific processes, offer these capabilities as services, and work with standardized horizontal and vertical information linking.

In this blog post, we showcase the application of Model-Driven Engineering in the field of automated production systems, focusing on vertical integration by aligning AutomationML, IEC 62264 and B2MML. For example, a digitized shop floor is much more flexible and open to adaptation when standardized digital system models are used than it was in the past, when rapid changes to the business models were needed to keep service descriptions up to date.

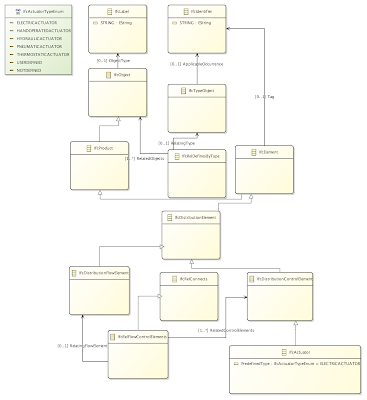

Our digital shop floor is encoded in XML-based AutomationML (AML). For the MES-to-ERP integration, we use IEC 62264 (specifically, its second part) and its XML serialization, B2MML. The implementation focuses on transforming between these three standards and extracting information into persistent document files.
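To give a feel for what "XML-based" means for the shop floor description, here is a minimal Python sketch that reads a heavily simplified, namespace-free AML fragment and lists its `InternalElement` hierarchy. The plant data (`AssemblyLine1`, `Robot1`, the `ie-…` IDs) and the helper `list_internal_elements` are made up for illustration; real AML files carry namespaces, role and interface libraries, and much more detail.

```python
import xml.etree.ElementTree as ET

# Simplified, namespace-free CAEX/AutomationML fragment (hypothetical plant data).
AML_SNIPPET = """
<CAEXFile FileName="shopfloor.aml">
  <InstanceHierarchy Name="ShopFloor">
    <InternalElement Name="AssemblyLine1" ID="ie-001">
      <InternalElement Name="Robot1" ID="ie-002"/>
      <InternalElement Name="Conveyor1" ID="ie-003"/>
    </InternalElement>
  </InstanceHierarchy>
</CAEXFile>
"""


def list_internal_elements(aml_text):
    """Return (ID, Name, parent ID or None) for every InternalElement."""
    root = ET.fromstring(aml_text)
    rows = []

    def walk(node, parent_id):
        # Visit direct children only, then recurse, so the parent is known.
        for child in node.findall("InternalElement"):
            rows.append((child.get("ID"), child.get("Name"), parent_id))
            walk(child, child.get("ID"))

    for hierarchy in root.findall("InstanceHierarchy"):
        walk(hierarchy, None)
    return rows


ELEMENTS = list_internal_elements(AML_SNIPPET)
for element_id, name, parent_id in ELEMENTS:
    print(element_id, name, parent_id)
```

The flat list of (ID, name, parent) tuples is only one possible in-memory view; a model-driven toolchain would instead load the file into a typed model that conforms to a metamodel.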

Implementation

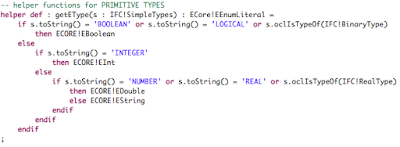

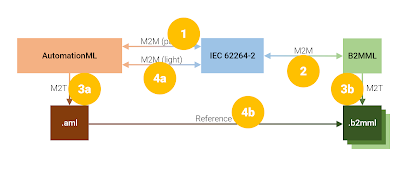

In this section, we give an overview of the transformation implementations between AutomationML, IEC 62264-2 and B2MML, based on the ATL Transformation Language for Model2Model (M2M) transformations and the Xtend Java dialect for Model2Text (M2T) transformations.

Implementation tasks overview


1. ATL M2M Transformation: AutomationML <-> IEC 62264-2 pure.

2. ATL M2M Transformation: B2MML <-> IEC 62264-2.

3. Xtend M2T Transformations:

   - 3.a AutomationML model to .aml document file.

   - 3.b B2MML model to .b2mml document file.

4. Additional Tasks:

   - 4.a ATL M2M Transformation: AutomationML <-> IEC 62264-2 light.

   - 4.b Unidirectional cross references from .aml document files to .b2mml document files.
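The difference between the M2M and M2T tasks listed above can be illustrated with a small sketch. The actual transformations are written in ATL and Xtend; plain Python is used here only to show the idea. The classes `AmlElement` and `Equipment`, the `ROLE_TO_LEVEL` mapping and the output element names are simplified assumptions, not the real metamodels, and the generated XML is B2MML-like rather than schema-valid B2MML.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class AmlElement:
    """Hypothetical, pared-down stand-in for an AutomationML InternalElement."""
    id: str
    name: str
    role: Optional[str] = None
    children: List["AmlElement"] = field(default_factory=list)


@dataclass
class Equipment:
    """Hypothetical, pared-down stand-in for an IEC 62264-2 equipment object."""
    id: str
    description: str
    level: str
    children: List["Equipment"] = field(default_factory=list)


# Assumed role-to-level mapping; real role class libraries are richer.
ROLE_TO_LEVEL = {"ProductionLine": "ProductionLine", "WorkCell": "WorkCell"}


def aml_to_equipment(element):
    """M2M step: map one AML element and its subtree to Equipment objects."""
    return Equipment(
        id=element.id,
        description=element.name,
        level=ROLE_TO_LEVEL.get(element.role, "Other"),
        children=[aml_to_equipment(child) for child in element.children],
    )


def equipment_to_xml(equipment):
    """M2T step: build a B2MML-like XML element (not schema-valid B2MML)."""
    node = ET.Element("Equipment")
    ET.SubElement(node, "ID").text = equipment.id
    ET.SubElement(node, "Description").text = equipment.description
    ET.SubElement(node, "EquipmentLevel").text = equipment.level
    for child in equipment.children:
        node.append(equipment_to_xml(child))
    return node


line = AmlElement("ie-001", "AssemblyLine1", "ProductionLine",
                  [AmlElement("ie-002", "Robot1", "WorkCell")])
equipment = aml_to_equipment(line)
b2mml_text = ET.tostring(equipment_to_xml(equipment), encoding="unicode")
print(b2mml_text)
```

The M2M step works purely on objects and never touches text, while the M2T step is the only place where a concrete file syntax appears. Keeping the two apart is what allows the same IEC 62264-2 model to be reached from either AutomationML or B2MML.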